Recently I was looking at building a Sitecore search domain index (see Domain vs God index) that had quite a few calculated fields. Many of those fields were based on the same information about the current item’s parent nodes, so for each calculated field I was performing the same lookups again and again on the same item.

I thought there had to be a way to improve this, and found a forum post from 2015, Indexing multiple fields at same time, where someone asked the same question and another user replied with how they had solved it on one of their projects.

The answer makes it look quite easy: just override this method in the DocumentBuilder.

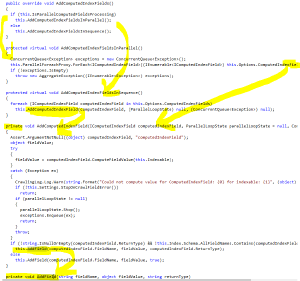

However, decompiling with DotPeek, it appears that somewhere between 2015 and Sitecore 8.2 Update 6 the method containing the logic I want to override was made private, quite possibly when parallel indexing was introduced. The code now forks in two, and both branches call a private method that contains the logic I want to override.

And that private method calls another private method. :/

Until Sitecore adds (or adds back) the extensibility points I need, it’s reflection to the rescue.

But reflection is slow, so let’s improve performance by looking the private method up once and binding it to a strongly typed delegate that can be called repeatedly (Improving Reflection Performance with Delegates).
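To make the delegate trick concrete outside Sitecore, here is a minimal, self-contained sketch. `LegacyBuilder`, `PrivateMethodAccess`, `AddFieldFast` and `AddViaInvoke` are hypothetical names, and the private `AddField` here only records calls. The private method is bound once with `Delegate.CreateDelegate` as an open-instance delegate (the target instance is passed as the first argument), alongside the slower `MethodInfo.Invoke` route for comparison.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for a class whose useful method is private,
// in the same position as SolrDocumentBuilder.AddField in this post.
public class LegacyBuilder
{
    public int FieldCount { get; private set; }
    public string LastFieldName { get; private set; }

    private void AddField(string fieldName, object fieldValue, string returnType)
    {
        FieldCount++;
        LastFieldName = fieldName;
    }
}

public static class PrivateMethodAccess
{
    // Open-instance delegate: the first parameter is the instance ("this").
    public delegate void AddFieldDelegate(LegacyBuilder builder, string fieldName, object fieldValue, string returnType);

    // The reflection lookup runs once, when the static fields are initialized.
    public static readonly MethodInfo AddFieldMethod =
        typeof(LegacyBuilder).GetMethod(
            "AddField",
            BindingFlags.Instance | BindingFlags.NonPublic,
            null,
            new[] { typeof(string), typeof(object), typeof(string) },
            null)
        ?? throw new MissingMethodException(nameof(LegacyBuilder), "AddField");

    // Fast path: a strongly typed delegate, called like a normal method.
    public static readonly AddFieldDelegate AddFieldFast =
        (AddFieldDelegate)Delegate.CreateDelegate(typeof(AddFieldDelegate), AddFieldMethod);

    // Slow path for comparison: late-bound Invoke with an object[] on every call.
    public static void AddViaInvoke(LegacyBuilder builder, string fieldName, object fieldValue, string returnType)
    {
        AddFieldMethod.Invoke(builder, new object[] { fieldName, fieldValue, returnType });
    }
}
```

Both paths reach the same private method; the difference is that `GetMethod` and `CreateDelegate` are paid for once, while `MethodInfo.Invoke` allocates an argument array and goes through late-bound dispatch on every call.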

Edit: it turns out SolrDocumentBuilder isn’t a singleton (a new builder is created for each indexable), so I’ve moved the reflection out of the constructor and into a static constructor.
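A minimal sketch of why the static constructor matters, assuming one builder instance per indexed item (as the `IIndexable` constructor parameter suggests). `PerItemBuilder` is a hypothetical class; its static constructor stands in for the `GetMethod`/`CreateDelegate` work and counts how often it runs.

```csharp
using System;

// Hypothetical stand-in for SolrDocumentBuilderCustom: one instance is
// created per indexed item, but the expensive setup lives in the static
// constructor, which the runtime runs at most once per type.
public class PerItemBuilder
{
    private static readonly Func<string, string> _cachedOperation;

    // Counts how many times the "expensive" setup has run.
    public static int SetupCount { get; private set; }

    static PerItemBuilder()
    {
        // Stand-in for GetMethod + Delegate.CreateDelegate.
        SetupCount++;
        _cachedOperation = value => value.ToUpperInvariant();
    }

    public PerItemBuilder(string itemId)
    {
        ItemId = itemId;
    }

    public string ItemId { get; }

    // Every instance reuses the delegate built once in the static constructor.
    public string Process() => _cachedOperation(ItemId);
}
```

Had the setup lived in the instance constructor, it would run once for every builder created, which during an index rebuild means once per indexed item.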

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Reflection;
using System.Threading.Tasks;
using Sitecore.ContentSearch;
using Sitecore.ContentSearch.ComputedFields;
using Sitecore.ContentSearch.Diagnostics;
using Sitecore.ContentSearch.SolrProvider;
using Sitecore.Diagnostics;

public class SolrDocumentBuilderCustom : SolrDocumentBuilder
{
    // Open-instance delegate matching the private
    // SolrDocumentBuilder.AddField(string, object, string) overload.
    // The first parameter is the builder instance the method is called on.
    private delegate void AddFieldDelegate(SolrDocumentBuilder documentBuilder, string fieldName, object fieldValue, string returnType);

    private static readonly AddFieldDelegate _addFieldDelegate;

    // A builder is created per indexable, so do the reflection once per type
    // in the static constructor rather than once per instance.
    static SolrDocumentBuilderCustom()
    {
        var addFieldMethod = typeof(SolrDocumentBuilder).GetMethod(
            "AddField",
            BindingFlags.Instance | BindingFlags.NonPublic,
            null,
            new[] { typeof(string), typeof(object), typeof(string) },
            null);

        // Fail with a clear message if an upgrade renames or changes the private method.
        if (addFieldMethod == null)
        {
            throw new MissingMethodException(typeof(SolrDocumentBuilder).FullName, "AddField");
        }

        _addFieldDelegate = (AddFieldDelegate)Delegate.CreateDelegate(typeof(AddFieldDelegate), addFieldMethod);
    }

    public SolrDocumentBuilderCustom(IIndexable indexable, IProviderUpdateContext context)
        : base(indexable, context)
    {
    }

    public override void AddComputedIndexFields()
    {
        if (this.IsParallelComputedFieldsProcessing)
            this.AddComputedIndexFieldsInParallel();
        else
            this.AddComputedIndexFieldsInSequence();
    }

    // Both the parallel and sequential paths are overridden so that they call
    // our AddComputedIndexField instead of the base class's private one.
    protected override void AddComputedIndexFieldsInParallel()
    {
        var exceptions = new ConcurrentQueue<Exception>();

        this.ParallelForeachProxy.ForEach<IComputedIndexField>(
            (IEnumerable<IComputedIndexField>)this.Options.ComputedIndexFields,
            this.ParallelOptions,
            (Action<IComputedIndexField, ParallelLoopState>)((field, parallelLoopState) => this.AddComputedIndexField(field, parallelLoopState, exceptions)));

        if (!exceptions.IsEmpty)
            throw new AggregateException((IEnumerable<Exception>)exceptions);
    }

    protected override void AddComputedIndexFieldsInSequence()
    {
        foreach (IComputedIndexField computedIndexField in this.Options.ComputedIndexFields)
            this.AddComputedIndexField(computedIndexField, (ParallelLoopState)null, (ConcurrentQueue<Exception>)null);
    }

    // Mirrors the decompiled base method, with one addition: a computed field
    // may return List<Tuple<fieldName, value, returnType>>, and each tuple is
    // written to the document as its own field.
    private new void AddComputedIndexField(IComputedIndexField computedIndexField, ParallelLoopState parallelLoopState = null, ConcurrentQueue<Exception> exceptions = null)
    {
        Assert.ArgumentNotNull(computedIndexField, nameof(computedIndexField));

        object fieldValue;
        try
        {
            fieldValue = computedIndexField.ComputeFieldValue(this.Indexable);
        }
        catch (Exception ex)
        {
            CrawlingLog.Log.Warn(string.Format("Could not compute value for ComputedIndexField: {0} for indexable: {1}", computedIndexField.FieldName, this.Indexable.UniqueId), ex);

            if (!this.Settings.StopOnCrawlFieldError())
                return;

            if (parallelLoopState != null)
            {
                parallelLoopState.Stop();
                exceptions.Enqueue(ex);
                return;
            }

            throw;
        }

        var fieldValues = fieldValue as List<Tuple<string, object, string>>;
        if (fieldValues != null)
        {
            // Multi-field result: Item1 = field name, Item2 = value, Item3 = return type.
            // An empty list adds nothing.
            foreach (var field in fieldValues)
            {
                if (!string.IsNullOrEmpty(field.Item3) && !this.Index.Schema.AllFieldNames.Contains(field.Item1))
                    _addFieldDelegate(this, field.Item1, field.Item2, field.Item3);
                else
                    this.AddField(field.Item1, field.Item2, true);
            }

            return;
        }

        // Single-value result: same branching as the decompiled base method.
        if (!string.IsNullOrEmpty(computedIndexField.ReturnType) &&
            !this.Index.Schema.AllFieldNames.Contains(computedIndexField.FieldName))
        {
            _addFieldDelegate(this, computedIndexField.FieldName, fieldValue, computedIndexField.ReturnType);
        }
        else
        {
            this.AddField(computedIndexField.FieldName, fieldValue, true);
        }
    }
}
```
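The key addition in the override is the branch on `List<Tuple<string, object, string>>`. Below is a Sitecore-free sketch of just that branch, so it can be run and tested in isolation. `ComputedValueDispatcher` and the `addField` callback are hypothetical, and the sketch deliberately leaves out the schema and `ReturnType` check that chooses between the two `AddField` overloads.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified version of the branching in AddComputedIndexField:
// a List<Tuple<name, value, returnType>> is fanned out into one field per
// tuple; any other value (including null) becomes a single field.
public static class ComputedValueDispatcher
{
    // Returns the number of fields passed to addField.
    public static int Dispatch(
        string fieldName,
        string returnType,
        object computedValue,
        Action<string, object, string> addField)
    {
        var fieldValues = computedValue as List<Tuple<string, object, string>>;
        if (fieldValues == null)
        {
            addField(fieldName, computedValue, returnType);
            return 1;
        }

        foreach (var field in fieldValues)
        {
            // Item1 = field name, Item2 = value, Item3 = return type.
            addField(field.Item1, field.Item2, field.Item3);
        }

        return fieldValues.Count;
    }
}
```

Note that when a list is returned, the computed field’s own configured name is not written at all; only the names inside the tuples are.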

You can then (as in the forum post referenced above) return a List of Tuples from your computed index field, each holding a Solr field name, its value and its return type. They all get added to the index in one go, without re-running any shared logic for each field.

```csharp
// Inside your computed field's ComputeFieldValue:
var result = new List<Tuple<string, object, string>>
{
    new Tuple<string, object, string>("solrfield1", value1, "stringCollection"),
    new Tuple<string, object, string>("solrfield2", value2, "stringCollection"),
    new Tuple<string, object, string>("solrfield3", value3, "stringCollection")
};

return result;
```
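To illustrate the payoff, here is a hypothetical sketch of a combined field in which an expensive ancestor lookup runs once and feeds three fields. `AncestorLookup`, `CombinedAncestorField`, the field names and the `int`/`string` return types are illustrative assumptions. The class only mirrors the shape of `IComputedIndexField.ComputeFieldValue` (it takes an item path string rather than an `IIndexable`), and real return-type names would need to match the type matches configured for your index.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the repeated parent-node lookups; in Sitecore
// this would walk the item's ancestors.
public class AncestorLookup
{
    public int CallCount { get; private set; }

    public IReadOnlyList<string> GetAncestorNames(string itemPath)
    {
        CallCount++;
        var segments = itemPath.Split(new[] { '/' }, StringSplitOptions.RemoveEmptyEntries);
        // Every path segment except the last is an ancestor.
        return segments.Take(segments.Length - 1).ToList();
    }
}

// Hypothetical combined field: the shared lookup runs once, and its result
// feeds several fields returned as (name, value, returnType) tuples.
public class CombinedAncestorField
{
    private readonly AncestorLookup _lookup;

    public CombinedAncestorField(AncestorLookup lookup)
    {
        _lookup = lookup;
    }

    public object ComputeFieldValue(string itemPath)
    {
        var ancestors = _lookup.GetAncestorNames(itemPath);

        return new List<Tuple<string, object, string>>
        {
            Tuple.Create("ancestor_names", (object)ancestors.ToList(), "stringCollection"),
            Tuple.Create("ancestor_depth", (object)ancestors.Count, "int"),
            Tuple.Create("top_ancestor", (object)(ancestors.FirstOrDefault() ?? string.Empty), "string")
        };
    }
}
```

With three separate computed fields, the lookup would run three times per item; here it runs once and the builder fans the tuples out into individual fields.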

End Result

In my case, with 10+ calculated fields that could be combined, I got the index rebuild time down from 1 hour 8 minutes to 22 minutes on my local dev machine.

I then improved rebuild times further by restricting which part of the content tree the domain index crawls.

It seems I’m not the only one whose indexes could benefit from this. Hopefully Sitecore will either add support for it or make the document builder easier to extend again in the future, without nasty reflection.

Happy Sitecoring!