Sitecore Log4Net Lockup - Hotfix & Don’t Share Appenders

This post is about a very rare elusive bug that caused the affected Sitecore instance to freeze up, as well not log anything which gave a clue what the problem was.

It was a combination of a bug in the Sitecore Log4Net code and additional Log4Net configuration that had been added (not OOTB) that caused Sitecore to lock up.

If you haven’t seen this issue, where a Sitecore instance locks up and you needed to restart IIS/Server, you probably won’t need this hotfix, but hopefully will get this hotfix rolled into a future version of Sitecore, so you’ll get the benefit then of hopefully never seeing this bug.

The Log4Net configuration recommendations are worth following though should you add any bespoke configuration.

As I mentioned it was a rare bug, and could go months without seeing the issue surface.

The affected Sitecore instance performed publishing and indexing operations, so was quite busy with lots of threads.

This issue has been seen in multiple Sitecore versions, up to at least Sitecore 9.3.

To get to the bottom of this elusive bug required a memory dump of the affected server. Sometimes restoring service is more important so not always possible to get a memory dump.

Even once that was done, required diagnostics to be added to work out what was causing the lock up/what was null, and more waiting for the issue to re-occur.

What was locking up/What was null?

Looking in the Sitecore.Logging.dll

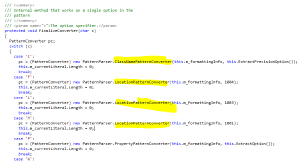

And the “PatternParser” class, you can see if you pass in any of %C, %F, %L, %M, %l in your log4net conversionPattern configuration, it will call either the “ClassNamePatternConverter” or the “LocationPatternConverter”:

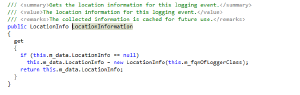

Both of these then make calls to get the Location information, which constructs a new “LocationInfo” instance.

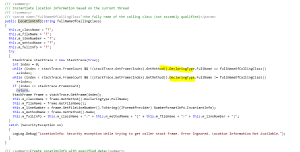

The constructor of the “LocationInfo” loops through the stack trace.

This code assumes that the DeclaringType is always going to be available, however it is possible that the stackFrame.GetMethod().DeclaringType might be null for lambda, which it was in our cases.

Possible workaround

One possible workaround before getting a hotfix would have been to not pass in these patterns which were causing the issues. However having the class name and location info of where an issue occurred is useful information.

Don’t share appenders

So Sitecore/Log4Net was failing to log when the stack trace had a Lambda which had a null DeclaringType.

Why was this causing Sitecore to hang?

Shouldn’t logging fail safe?

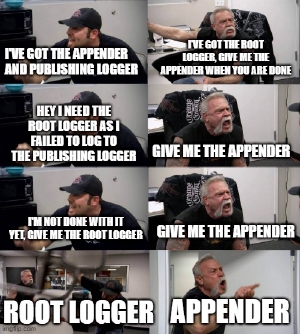

Well when a logger fails, it tries to log the issue to the root logger.

Our root logger was setup to also log to the same appender instance, also with the same ConversionPattern.

Due to the shared appender between loggers it was possible for deadlock to occur.

Setup/Key Facts

- RootLogger and PublishingLogger use the same appender instance

- DoAppend locks the appender which invoked it

- CallAppenders locks the logger which invoked it.

Scenario

- Publishing Logger call CallAppenders and DoAppend => gets the lock of Appender instance and PublishingLoggerObject

- RootLogger call CallAppeners and locks RootLoggerObject

- RootLogger calls DoAppender for Appender and tries to get the lock of the Appender Instance. But is already locked by PublshingLogger so has to wait until finishes.

- Whilst the PublishingLogger is trying to log, it gets an exception in LocationInfo, and so calls RootLogger to log the exception.

- RootLogger calls CallAppender and tries to get the lock of RootLoggerObject but is already locked

Deadlock

AzureFallbackAppender

Looking at App_Config\Sitecore\ExperienceContentManagement.Administration\Sitecore.ExperienceContentManagement.Administration.config

The AzureFallbackAppender is setup the same, for all the loggers to share the same appender.

“AzureFallbackAppender was designed specifically for Sitcore Support Package generator and it allowed us to collect the log files while generating the package. It was decided that only one AzureFallbackAppender remains for all kinds of logs, because of performance reasons.”

So whilst this isn’t recommended, the AzureFallbackAppender is a limited specific scenario, and issues haven’t been encountered with this.

Application Insights PAAS appender

Looking at the file in the Azure Websites project: App_Config\Sitecore\Azure\Sitecore.Cloud.ApplicationInsights.config

can see has the patch swaps out the default appends for the ApplicationInsights Log4NetAppender, so can see has a separate appender per logger as recommended.

So following the recommended practice.

Summary

- DeclaringType can be null for Lambdas

- Check for nulls

- Don’t share Log4Net appenders between loggers

Sitecore hotfix reference number

To monitor the progress of the related issue, please use reference number [437603]