Sitecore (Fast) Queries

There’s quite a few blog posts on avoiding using (fast) Sitecore queries on Content Delivery.

- https://blog.rauljimenez.co.uk/not-so-fast/

- https://intothecloud.blog/2018/10/21/It-s-time-to-put-fast-query-to-rest/

- https://blog.coates.dk/2014/11/19/sitecore-fast-query-syntax-can-kill-your-sql-server-or-website/

- http://intothecore.cassidy.dk/2016/05/sitecore-decennial-series-2-dont.html

Summary Fast always goes to the database, and even a normal Sitecore query can go from being a slow page, to bringing your site to it’s knees if that page is hit frequently enough.

I’ve had experience of this on inheriting a solution which would do three sitecore queries on a page load for calendar events, for items within a folder. Normally the page would load within a few seconds, not great but usable. However when a search engine got onto the page, and decided to crawl through the calendar navigation links, which are effectively infinite, the execution time grew and grew.

Until the page wouldn’t load in a couple of mins, .net thread pool limits were reached, and the site was effectively down.

The quick fix was to prevent these pages from being indexed, as well as indicate not to follow the calendar links forever.

The longer term fix was to switch to Content Search, using an index to load the content, to speed the page up (as well as keeping the indicators not to follow the infinite links).

(Of course using Sitecore Query for a few sub items, or getting an item by id is acceptable, but never Fast)

Link Database

So onto the topic of this post, the Link Database certainly has it’s place, finding references/referrers of items for one on Content Authoring being it’s primary usage.

There are some considerations before you start adding a dependency to the Links database in your code. (Hint, use Content Search were possible).

The Links Database, is more of a table which used to reside in the Core database by default until Sitecore 9, and then was moved to the Web database ahead of removing the dependency on the Core database.

We switched back to using the Core database to store the links again for reason I’ll come to shortly.

Scaling Limitation?

If you work in a Scaled environment though, you may have more than one Web or Core database. There can be only one link database(Table) maintained though. So in a multi-region scaled environment, you’ll be travelling across regions to the database with the maintained link database.

Now it might be with database replication that this problem gets resolved, and other regions can query a read only copy of a replicated database which contains the Link Database(table).

However we are avoiding using the Link Database on content delivery so we don’t encounter any scalability issues.

Rebuild Speed

Rebuilding the Link Database occurs in serial (one Databases links after the next get rebuilt in serial) and takes a long time. E.g, 8+ Hours. I understand from Sitecore support there are some experimental patches to speed up making the index rebuild faster/rebuild it in parallel, so far haven’t got around to trying these, but possibly an avenue worth pursuing.

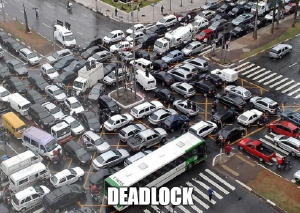

Deadlocks

I understand the situation used to be worse before Sitecore 7.1, were selects didn’t use the nolock hint, but still regularly see deadlocks on this table in production even on Sitecore 9.

SQL Server transaction deadlocks related to the link database could occur when multiple threads were creating and moving items concurrently, for example, when the threads created items that were stored in item buckets. This has been fixed by changing the GetReferrers(Item, ID) method in the SqlLinkDatabase class so that it uses WITH NOLOCK when reading from the Links table. (401393) 7.1 release notes

Bad Execution Plan

Sometimes when Sql Server evaluates a query on the Link Database(table), depending on the values being queried, it was deciding to do a TableScan to find an entry. This would get saved as the execution plan, and all the queries would go slower/use up more DTU’s, until the execution plan was discarded.

High DTU usage

We noticed that every publish, there was a DTU spike. We even saw some issues on the live site because of these Bad Execution Plan, combined with these High DTU spikes, on other pages trying to query the same database under load. So something had to be done, other than the immediate increase the DTU limits on the database server, and throw money at the problem.

Disable updating the link database on publish

The following setting cut down the DTU spikes we were seeing on every publish, as we don’t use the Links Database on Content Delivery, was a quick win for us.

Disables incremental updates to the link database during publishing operations as an experimental optimization to speed up publishing (25% on average).

Enabling Parallel Publishing and related optimizations

<setting name="LinkDatabase.UpdateDuringPublish" value="false"/>

Fixing the Bad Execution Plan

It was choosing to do a Table Scan, because unfortunately there was no covering index for the query, because one of the columns is of type “ntext” which prevents it from being part of the index.

Sitecore confirmed that according to MS documentation that ntext will be removed from future versions of Sql Server. So in the future this column type will change.

IMPORTANT! ntext, text, and image data types will be removed in a future version of SQL Server. Avoid using these data types in new development work, and plan to modify applications that currently use them. Use nvarchar(max), varchar(max), and varbinary(max) instead.

So we are currently trying out applying the following change to the Links Database(Table)

ALTER TABLE [Links] ALTER COLUMN TargetPath NVARCHAR(MAX) NOT NULL

GO

CREATE INDEX ndxLinks_SourceItemId_SourceDatabase_ALL ON [Links]

([SourceItemID], [SourceDatabase])

INCLUDE ([SourceLanguage], [SourceVersion], [SourceFieldID], [TargetDatabase], [TargetItemID], [TargetLanguage], [TargetVersion], [TargetPath] )

WITH (ONLINE=ON, SORT_IN_TEMPDB=ON)

And so far doesn’t appear to be causing much overhead to writes, is having the desired affect on preventing a bad execution plan and queries are using the new index, and to review if this has any beneficial impact to the deadlocks.

To update further once released to production/have more feedback. Hopefully these changes will come through in a future update of the sitecore product.

Summary

Be careful before taking a dependency on the Links Database, your architecture in the future if you are planning on moving to a scaled active/active multi region setup might not support it.

If you are using the Links Database on Content Delivery then you won’t have this luxury of being able to turn off this setting to not update the Web links database on publish. If you aren’t using the Links Database, then maybe turn off this feature to save some DTU’s.

If you are also seeing slow queries of the links database/high DTU’s, maybe try out this Sql Schema/Index and test yourself, or wait for this to become part of the sitecore product.

I’d recommend using Sitecore Content Search where possible.