Sitecore 9 Index Slow Down - fix

Tue Oct 30, 2018 in Development, Sitecore using tags Crawling, Sitecore, Solr, Indexing, Bug, Upgrade

Following an upgrade to Sitecore 9.0 update 2 from Sitecore 8.2 update 6, I spotted that rebuilds of the default indexes (Core, Web & Master) were taking much longer than before.

We're talking about rebuilding these 3 indexes in parallel in under 50 mins on Sitecore 8.2, compared with over 6 hours on Sitecore 9 (sometimes 20+ hours), for ~1/4 million items in each of the web and master databases.

This was using the same SolrCloud infrastructure (which had been upgraded ahead of the Sitecore 9 upgrade), the same size VMs for the Sitecore indexing server, and the same index batch sizes & threads:

```xml
<setting name="ContentSearch.ParallelIndexing.MaxThreadLimit" value="15" />
<setting name="ContentSearch.ParallelIndexing.BatchSize" value="1500" />
```

Looking at the logs, I could see they were flooded with messages like this:

```
XXXX XX:XX:XX WARN More than one template field matches. Index Name : sitecore_master_index Field Name : XXXXXXXXX
```

Initial discussions with Sitecore Support led to applying some patches to filter out the messages being written to the log files (bug #195567).

However, this felt more like treating the symptoms rather than the cause.

With performance still only slightly improved, I tried to patch the behaviour of SolrFieldNameTranslator using reflection and overrides, so that it wouldn't need to write these warnings to the log files in the first place. Unfortunately the code had lots of private non-virtual methods and implemented an internal interface. That made it quite tricky to override without IL modification, so this really was something for Sitecore to fix.

But even after all this, a rebuild still took around 4+ hours on a good day.

I observed that an individual rebuild of the Core index was quite fast on its own, ~5 mins.

But Sitecore Support confirmed that the algorithm used relies on resource stealing to make the jobs finish at about the same time as each other (a slow job steals resources from a faster job).

It was also confirmed that in Sitecore 8.2 update 6 all indexes took a similar time when run in parallel.

Work Stealing in Task Scheduler

Blog on Work Stealing

Resource usage on the servers and DTU usage on the database were both minimal, so nothing appeared to be maxing out.

So what was the issue? Was it some locking, or a change to job scheduling in Sitecore 9?

Well, to find the answer, we needed some performance traces from a test environment where we could replicate the issue.

After enough performance traces had been captured, Sitecore Support observed that there were lots of idle threads doing nothing.

This was odd on a server with 16 cores and 15 threads allocated for indexing.

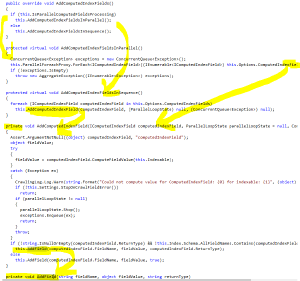

Sitecore Support were then able to find the bug. It is specific to the OnPublishEndAsynchronousSingleInstanceStrategy, which was being used by the web index.

This strategy overrides the Run() method and initialises the LimitedConcurrencyLevelTaskSchedulerForIndexing singleton with an incorrect MaxThreadLimit value.

This code appears to be the same in previous versions. It's likely we were using onPublishEndAsync rather than onPublishEndAsyncSingleInstance before the upgrade.

Ask Sitecore Support for bug fix #285903 if you are affected by this, so your config settings don't get overwritten.
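To make the failure mode concrete, here is a minimal sketch of a lazily created, process-wide scheduler singleton. Whichever code path creates it first decides the thread limit, and every later caller silently shares that instance. The names `IndexingScheduler` and `GetOrCreate` are hypothetical. This is not Sitecore's implementation of `LimitedConcurrencyLevelTaskSchedulerForIndexing`; it only illustrates the "first initialiser wins" pattern.

```csharp
// Hypothetical stand-in for a process-wide indexing scheduler singleton.
// NOT Sitecore's code: it only shows how the first initialiser's limit sticks.
public sealed class IndexingScheduler
{
    private static IndexingScheduler _instance;
    private static readonly object Sync = new object();

    public int MaxDegreeOfParallelism { get; }

    private IndexingScheduler(int maxDegreeOfParallelism)
    {
        MaxDegreeOfParallelism = maxDegreeOfParallelism;
    }

    // The first caller decides the limit. Later callers get the existing
    // instance, and the limit they ask for is silently ignored.
    public static IndexingScheduler GetOrCreate(int requestedLimit)
    {
        lock (Sync)
        {
            if (_instance == null)
            {
                _instance = new IndexingScheduler(requestedLimit);
            }
            return _instance;
        }
    }

    // Helper so the example can be re-run within one process.
    internal static void Reset()
    {
        lock (Sync) { _instance = null; }
    }
}
```

Suppose a strategy that runs first passes a lower value than the configured `ContentSearch.ParallelIndexing.MaxThreadLimit`. Every rebuild sharing the singleton would then be throttled to that lower limit, which would be consistent with the idle threads seen in the traces.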

Speeding up Sitecore crawling

Sat Feb 10, 2018 in Development, Sitecore using tags Crawling, Sitecore, Solr, Indexing

Recently I was looking at building a Sitecore search domain index (see Domain vs God index), which had quite a few calculated fields. Many of the calculated fields were based on similar information about the parent nodes of the current item. For each calculated field I was performing the same lookups on the same item again and again.

I thought there had to be a way to improve this. I found a forum post from 2015 where someone asked the same question, with a response from someone who had solved it on one of their projects: Indexing multiple fields at same time

In the given answer, it looks quite easy to override this method in the DocumentBuilder:

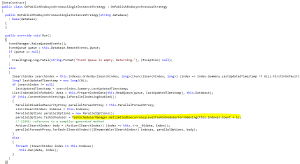

However, looking in DotPeek, it appears that somewhere between 2015 and Sitecore 8.2 update 6 the method containing the logic I want to override was made private, quite possibly when parallel indexing was introduced. The code now forks in two, and both branches call a private method containing that logic.

And that private method calls another private method. :/

Until Sitecore adds (or adds back) the extensibility points I need, it's reflection to the rescue.

But reflection is slow, so let's improve its performance by using delegates (Improving Reflection Performance with Delegates).

Edit: it appears the SolrDocumentBuilder isn't a singleton, so I moved the reflection from the constructor into a static constructor.
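The sketch below illustrates the delegate technique on its own, independent of Sitecore. It binds a private instance method to an open-instance delegate once, in a static constructor, and then calls it like an ordinary method, avoiding the per-call overhead of `MethodInfo.Invoke`. `Widget` and its private `AddField` method are hypothetical stand-ins for `SolrDocumentBuilder.AddField`.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical class with a private method we cannot call directly.
public class Widget
{
    public List<string> Fields { get; } = new List<string>();

    private void AddField(string name, object value, string returnType)
    {
        Fields.Add($"{name}={value} ({returnType})");
    }
}

public static class WidgetAccessor
{
    // Open-instance delegate: the target instance is passed as the first argument.
    public delegate void AddFieldDelegate(Widget target, string name, object value, string returnType);

    // Looked up once; used by the slower Invoke path for comparison.
    public static readonly MethodInfo AddFieldMethod;

    // Bound once; afterwards each call costs roughly a normal delegate call.
    public static readonly AddFieldDelegate AddField;

    static WidgetAccessor()
    {
        AddFieldMethod = typeof(Widget).GetMethod(
            "AddField",
            BindingFlags.Instance | BindingFlags.NonPublic,
            null,
            new[] { typeof(string), typeof(object), typeof(string) },
            null)
            ?? throw new MissingMethodException(nameof(Widget), "AddField");

        AddField = (AddFieldDelegate)Delegate.CreateDelegate(typeof(AddFieldDelegate), AddFieldMethod);
    }

    // Slow path: allocates an object[] and goes through late binding on every call.
    public static void AddFieldViaInvoke(Widget target, string name, object value, string returnType)
    {
        AddFieldMethod.Invoke(target, new object[] { name, value, returnType });
    }
}
```

This mirrors the `_addFieldDelegate` field in the listing that follows. The `MethodInfo` lookup and `CreateDelegate` call happen once per AppDomain rather than once per document builder.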

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Reflection;
using System.Threading.Tasks;
using Sitecore.ContentSearch;
using Sitecore.ContentSearch.ComputedFields;
using Sitecore.ContentSearch.Diagnostics;
using Sitecore.ContentSearch.SolrProvider;
using Sitecore.Diagnostics;

public class SolrDocumentBuilderCustom : SolrDocumentBuilder
{
    // Open-instance delegate for the private SolrDocumentBuilder.AddField(string, object, string):
    // the builder instance is passed as the first argument.
    private delegate void AddFieldDelegate(SolrDocumentBuilder documentBuilder, string fieldName, object fieldValue, string returnType);

    private static readonly AddFieldDelegate _addFieldDelegate;

    // The builder isn't a singleton, so resolve the private method once per AppDomain here.
    static SolrDocumentBuilderCustom()
    {
        var solrDocumentBuilderType = typeof(SolrDocumentBuilder);
        var addFieldMethod = solrDocumentBuilderType.GetMethod("AddField",
            BindingFlags.Instance | BindingFlags.NonPublic,
            null,
            new[] { typeof(string), typeof(object), typeof(string) },
            null);

        // Fail with a clear message if a Sitecore upgrade renames or removes the method.
        if (addFieldMethod == null)
            throw new MissingMethodException(solrDocumentBuilderType.FullName, "AddField");

        _addFieldDelegate = (AddFieldDelegate)Delegate.CreateDelegate(typeof(AddFieldDelegate), addFieldMethod);
    }

    public SolrDocumentBuilderCustom(IIndexable indexable, IProviderUpdateContext context)
        : base(indexable, context)
    {
    }

    public override void AddComputedIndexFields()
    {
        if (this.IsParallelComputedFieldsProcessing)
            this.AddComputedIndexFieldsInParallel();
        else
            this.AddComputedIndexFieldsInSequence();
    }

    protected override void AddComputedIndexFieldsInParallel()
    {
        var exceptions = new ConcurrentQueue<Exception>();

        this.ParallelForeachProxy.ForEach<IComputedIndexField>(
            (IEnumerable<IComputedIndexField>)this.Options.ComputedIndexFields,
            this.ParallelOptions,
            (Action<IComputedIndexField, ParallelLoopState>)((field, parallelLoopState) =>
                this.AddComputedIndexField(field, parallelLoopState, exceptions)));

        if (!exceptions.IsEmpty)
            throw new AggregateException((IEnumerable<Exception>)exceptions);
    }

    protected override void AddComputedIndexFieldsInSequence()
    {
        foreach (IComputedIndexField computedIndexField in this.Options.ComputedIndexFields)
            this.AddComputedIndexField(computedIndexField, (ParallelLoopState)null, (ConcurrentQueue<Exception>)null);
    }

    // Replaces the base class's private AddComputedIndexField: a computed field may now return
    // a List<Tuple<fieldName, value, returnType>> to populate several index fields at once.
    private new void AddComputedIndexField(IComputedIndexField computedIndexField, ParallelLoopState parallelLoopState = null, ConcurrentQueue<Exception> exceptions = null)
    {
        Assert.ArgumentNotNull((object)computedIndexField, nameof(computedIndexField));

        object fieldValue;
        try
        {
            fieldValue = computedIndexField.ComputeFieldValue(this.Indexable);
        }
        catch (Exception ex)
        {
            CrawlingLog.Log.Warn(string.Format("Could not compute value for ComputedIndexField: {0} for indexable: {1}", (object)computedIndexField.FieldName, (object)this.Indexable.UniqueId), ex);

            if (!this.Settings.StopOnCrawlFieldError())
                return;

            if (parallelLoopState != null)
            {
                parallelLoopState.Stop();
                exceptions.Enqueue(ex);
                return;
            }

            throw;
        }

        var fieldValues = fieldValue as List<Tuple<string, object, string>>;
        if (fieldValues != null)
        {
            // Multi-field result: each tuple is (field name, value, return type).
            foreach (var field in fieldValues)
            {
                // Fields not in the Solr schema go through the private, return-type-aware AddField.
                if (!string.IsNullOrEmpty(field.Item3) && !this.Index.Schema.AllFieldNames.Contains(field.Item1))
                    _addFieldDelegate(this, field.Item1, field.Item2, field.Item3);
                else
                    this.AddField(field.Item1, field.Item2, true);
            }
        }
        else
        {
            // Single-value result: handled the same way as before.
            if (!string.IsNullOrEmpty(computedIndexField.ReturnType) &&
                !this.Index.Schema.AllFieldNames.Contains(computedIndexField.FieldName))
                _addFieldDelegate(this, computedIndexField.FieldName, fieldValue, computedIndexField.ReturnType);
            else
                this.AddField(computedIndexField.FieldName, fieldValue, true);
        }
    }
}
```

You can then (as per the referenced forum post) return a List of Tuples from your computed index field. They all get added to the index in one go, without having to re-run shared logic for each field (assuming you have any).

```csharp
// Inside ComputeFieldValue: compute the shared values once, then return them together.
var result = new List<Tuple<string, object, string>>
{
    new Tuple<string, object, string>("solrfield1", value1, "stringCollection"),
    new Tuple<string, object, string>("solrfield2", value2, "stringCollection"),
    new Tuple<string, object, string>("solrfield3", value3, "stringCollection")
};

return result;
```
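The next example is a standalone model (no Sitecore types) of how the custom builder treats a computed field's return value. A `List<Tuple<string, object, string>>` expands into several index fields, and anything else is stored under the computed field's own name. It also shows an expensive ancestor lookup running once while feeding three fields. `ComputedFieldDispatch`, `AncestorFields` and the field names are hypothetical. The model also leaves out the schema check that chooses between the private and public `AddField` overloads.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the builder's dispatch on a computed field's return value.
public static class ComputedFieldDispatch
{
    // Returns the (fieldName, value, returnType) triples that would be written.
    public static List<Tuple<string, object, string>> Expand(
        string computedFieldName, string computedReturnType, object computedValue)
    {
        // A list of tuples means "one computed field, many index fields".
        if (computedValue is List<Tuple<string, object, string>> many)
        {
            return new List<Tuple<string, object, string>>(many);
        }

        // Anything else is a single value stored under the computed field's own name.
        return new List<Tuple<string, object, string>>
        {
            Tuple.Create(computedFieldName, computedValue, computedReturnType)
        };
    }
}

// Hypothetical computed-field body: the shared lookup runs once per item.
public static class AncestorFields
{
    public static int LookupCount { get; private set; }

    public static object Compute(string itemPath)
    {
        LookupCount++;
        // Stand-in for walking the item's parents in Sitecore.
        string[] ancestors = itemPath.Trim('/').Split('/');

        return new List<Tuple<string, object, string>>
        {
            Tuple.Create("ancestor_names", (object)ancestors, "stringCollection"),
            Tuple.Create("ancestor_depth", (object)ancestors.Length, "int"),
            Tuple.Create("root_name", (object)ancestors[0], "string")
        };
    }
}
```

With one computed field per value, the same ancestor walk would run three times per item. Here it runs once, which is the saving the forum post's approach relies on.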

End Result

In my particular case, with 10+ calculated fields that could be combined, I got the index rebuild time down from 1 hour 8 mins to 22 mins on my local dev machine.

I then went further to improve index rebuild times by restricting which part of the tree the domain index crawls.

It seems I'm not the only one whose indexes can benefit from this. Hopefully Sitecore will either add support for it, or make it easier to extend again in the future without nasty reflection.

Happy Sitecoring!

Logic App, Azure Function, Get Tweets Sentiment and Aggregate into an email

Fri Feb 9, 2018 in Development using tags Development, Azure, Azure Functions, Azure Logic Apps

Fun with Logic Apps, Azure Functions and Twitter

While studying for the Microsoft 70-532 exam, I wanted to take a look at Azure Functions & Logic Apps.

Having gone through the example “Create a function that integrates with Azure Logic Apps”, I was left with some questions on how to improve it. For example, I don't want to receive an email per tweet.

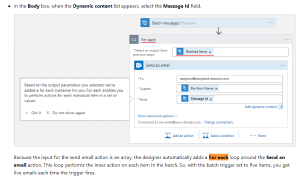

So after some searching, I came across a new feature called batching (“Send, receive, and batch process messages in logic apps”). But even after the batch size had been reached, each message in the batch still resulted in an individual email.

Then I came across this blog “Azure Logic Apps – Aggregate a value from an array of messages”

The Compose feature was what I wanted. First I compose the message I want out of each tweet, then I combine those messages into the format I want to email.
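Outside Logic Apps, those two Compose steps boil down to a map followed by a join. The C# sketch below only illustrates that shape. `Tweet`, its properties and `DigestBuilder` are hypothetical and do not reflect the Twitter connector's actual output schema. The sentiment value is assumed to come from the Azure Function.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical, trimmed-down tweet shape; not the Twitter connector's schema.
public sealed class Tweet
{
    public string User { get; set; }
    public string Text { get; set; }
    public double Sentiment { get; set; } // assumed score returned by the Azure Function
}

public static class DigestBuilder
{
    // Step 1 (compose per tweet): turn each tweet into one line of text.
    // InvariantCulture keeps the decimal separator stable regardless of server locale.
    public static string ComposeLine(Tweet t) =>
        "@" + t.User + " (" + t.Sentiment.ToString("0.00", CultureInfo.InvariantCulture) + "): " + t.Text;

    // Step 2 (combine): join every composed line into a single email body.
    public static string ComposeEmailBody(IEnumerable<Tweet> batch) =>
        string.Join(Environment.NewLine, batch.Select(ComposeLine));
}
```

Keeping the per-item formatting separate from the join matches the two-step Compose approach. Changing the email layout only touches step 1.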

I also wanted to make some improvements: excluding retweets, and filtering tweets to the right language (“How to Exclude retweets and replies in a search api”, “How to master twitter search”).

And here is the final result: a Twitter search for original tweets, filtered by language and combined into a single email.
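This filtering can be expressed as Twitter search operators appended to the search text: `-filter:retweets` excludes retweets, `-filter:replies` excludes replies, and `lang:en` restricts the language. The sketch below assumes the Logic App's search text is passed to Twitter search unchanged. The `TweetQuery.Build` helper is hypothetical, and in the Logic App you would simply type the resulting string into the trigger's search box.

```csharp
using System;
using System.Collections.Generic;

public static class TweetQuery
{
    // Builds a Twitter search string from a keyword plus standard search operators.
    // Assumption: the connector passes this text to Twitter search as-is.
    public static string Build(string keyword, string languageCode, bool excludeRetweets, bool excludeReplies)
    {
        if (string.IsNullOrWhiteSpace(keyword))
            throw new ArgumentException("A search keyword is required.", nameof(keyword));

        var parts = new List<string> { keyword.Trim() };
        if (excludeRetweets) parts.Add("-filter:retweets");
        if (excludeReplies) parts.Add("-filter:replies");
        if (!string.IsNullOrEmpty(languageCode)) parts.Add("lang:" + languageCode);

        return string.Join(" ", parts);
    }
}
```

For example, `TweetQuery.Build("#Sitecore", "en", true, false)` produces `#Sitecore -filter:retweets lang:en`.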